On the evening of Wednesday, May 23, Li Shuangfeng, head of TensorFlow China R&D and technical director of Google Search architecture, was invited to speak at Peking University's "Artificial Intelligence Frontier and Industry Trends" symposium. He talked about the development and applications of deep learning and about how TensorFlow moves from research to practice.

Thanks to Yu Jingxiang and Zhang Kang of QbitAI, media partner of Peking University's "Artificial Intelligence Frontier and Industry Trends" symposium series, who recorded the event. The speaker, Li Shuangfeng, also helped write and revise this article.

Guest Profile

Guest speaker: Li Shuangfeng, Director of China R&D, TensorFlow, and Technical Director of Google Search Architecture. He was one of Google's earliest engineers in China and currently leads several Google projects in artificial intelligence, search architecture, and mobile applications. He heads the TensorFlow China R&D team and leads the overall promotion of TensorFlow in China.

Transcript

Solve the most challenging problems with deep learning

Deep learning has revolutionized machine learning. We have seen a rapid increase in the popularity of the term "deep learning" in recent years.

The number of machine learning papers on arXiv has grown dramatically; the growth rate has even kept pace with the exponential curve of Moore's Law.

Take image classification as an example: given a picture, identify whether it shows a cat or a dog. The model is a multi-layer neural network with many parameters, and after extensive training it can recognize that the picture shows a cat.

Deep learning is not limited to image recognition; it is a very general and powerful way to learn. A deep neural network can be thought of as a very powerful function that handles many kinds of inputs and outputs, and deep learning algorithms learn this function automatically from data.

If the input is a photo and the output is a label such as "lion", this is image classification.

If the input is audio and the output is text, this is speech recognition.

If the input is English and the output is French, this is machine translation.

Going further, if the input is a picture and the output is a text description of it, this is image captioning.

Industry particularly values being able to solve many different problems with one similar, simple approach, because it greatly reduces engineering complexity.

Deep learning is not entirely new, so why has it achieved breakthroughs only in the past few years?

Consider two curves: one for solutions based on deep neural networks and one for other machine learning methods. Neural networks already existed in the 1980s and 1990s, but they were limited by the computing power of the time. Models had to be small, so they could not match other, well-optimized machine learning methods and struggled to solve large-scale real-world problems.

As computing power increased, deep learning's accuracy overtook that of other machine learning methods.

Take image recognition as an example. In 2011 the error rate was 26%, compared with about 5% for humans, so the technology was still far from practical. By 2016 the error rate had dropped to about 3%, showing deep learning's remarkable ability in this field. That is why deep learning has attracted so much attention in image recognition.

Let us look at how deep learning can help solve major challenges in the engineering community.

In 2008, the US National Academy of Engineering published a list of grand challenges for engineering in the 21st century, including making solar energy economical, engineering better medicines, enhancing virtual reality, and advancing personalized learning.

We might add two more: free communication across languages, and more general artificial intelligence systems. Back in 2008, progress in machine translation had hit a bottleneck, and most people believed it would take many more years for AI to defeat a Go master.

Looking back, deep learning has brought major breakthroughs in many areas, including health care and cross-language communication. Below we look more closely at how deep learning can help solve these major challenges.

Improve urban infrastructure

Transportation is one of these major challenges. Waymo, a company under Google's parent company Alphabet, focuses on autonomous driving. It has done extensive testing both on real roads and in simulated environments, and its cars can identify the various moving objects on the road as well as traffic lights.

Self-driving technology is getting closer and closer to real-world use. With driverless vehicles, urban infrastructure could see a huge breakthrough.

Cross-language communication and information flow

Over the past ten years, Google's machine translation has led the industry, but it used to be based on phrase-based statistical methods, and quality improvements had hit a bottleneck.

Over the past two years, Google introduced Google Neural Machine Translation (GNMT), which greatly improved translation quality. In quality evaluations across multiple languages, quality improved by 50% to more than 80%, a bigger gain than the previous decade of work. For several languages, machine translation is now close to human level. This is an important breakthrough for communication and exchange between people.

More importantly, Google not only published the technical papers but also open-sourced code based on TensorFlow. The code is easy to use: with one or two hundred lines of code, developers can build a neural machine translation system similar to GNMT.

This gives small companies the ability to build good machine translation systems as well. For example, a European company that used to be a traditional translation company had a lot of translation data, and using these open-source systems it quickly achieved very good results.

Neural machine translation has some very interesting properties. For example, if we translate an English sentence into Japanese and then back into English, the traditional approach often produces something quite different from the original, whereas neural machine translation can largely restore the original sentence.

Another benefit is on the engineering side. Engineers want to solve complex problems with the simplest possible solution, rather than designing a different system and model for each language pair. From this perspective, neural machine translation brings significant advantages.

For example, suppose we have a large amount of training data made up of sentence pairs, such as English-Korean pairs. With a simple encoding step, namely tagging each input sentence with the language to translate into, the same model can learn to translate between many different language pairs, so all languages are handled in one unified, simple way.

This is especially valuable for Google, because our goal is to translate among more than one hundred languages. That is a very complex problem and very cumbersome for engineers. Using a single neural network model for all languages makes the engineering work much simpler.

In addition, suppose the model learns English-to-Korean translation and also English-to-Japanese translation. A neural translation model can then translate from Japanese to Korean even though it was never trained on that pair, which shows that neural translation has a stronger ability to learn.
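The tagging step described above can be sketched as plain preprocessing. This is a minimal sketch, assuming the `<2xx>` target-language token format used in Google's multilingual NMT work; the helper names `tag_example` and `build_training_set` and the example sentences are illustrative, and the shared encoder-decoder model itself is not shown.

```python
# Minimal sketch of target-language tagging for multilingual NMT.
# Assumption: a "<2xx>" token at the start of the source sentence tells the
# single shared model which language to produce. Helper names are hypothetical.


def tag_example(source_sentence, target_lang):
    """Prepend a token naming the language the model should translate into."""
    return f"<2{target_lang}> {source_sentence}"


def build_training_set(pairs):
    """pairs: (source_sentence, target_sentence, target_lang) tuples.
    Returns (tagged_source, target) pairs for one mixed training corpus."""
    return [(tag_example(src, lang), tgt) for src, tgt, lang in pairs]


# One mixed corpus trains one model for every language pair.
corpus = build_training_set([
    ("Hello", "안녕하세요", "ko"),   # English -> Korean
    ("Hello", "こんにちは", "ja"),   # English -> Japanese
])

# Zero-shot request: Japanese -> Korean never appears in the training data,
# but it is encoded exactly the same way as the trained directions.
zero_shot_input = tag_example("こんにちは", "ko")
```

Because the target language is just another input token, adding a language pair changes the data, not the model architecture.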

AI and Health Care

Health care is an important direction for Google AI research, because we believe medicine is a very important application area for AI and one with far-reaching significance for the benefit of humankind.

Take detecting eye disease with AI as an example. On the left is a photo of a normal retina; on the right is one showing diabetic retinopathy, which can cause blindness in diabetic patients. If these lesions are found early, they can be treated with cheaper medication.

Our research, published in a leading American medical journal, showed that AI identifies these diseased retinal images more accurately than the average ophthalmologist.

Medical image recognition is one class of problems. It includes the retinal screening just mentioned, as well as reading X-rays.

A harder question is whether we can make predictions from a person's complete medical record, such as how many days a patient will need to stay in the hospital and which medications will be needed. This is a difficult prediction problem. Google has worked with several medical schools, including Stanford Medical School, and has achieved promising initial results.

Scientific development

Next, let's look at scientific development.

Tools have played an important role throughout human history. For example, the Stone Age and the Bronze Age are distinguished mainly by the tools people used. Today's scientific research involves many experiments, and we have built many kinds of experimental instruments.

However, are there tools that can help speed up the process of scientific discovery?

This is an important reason we launched an open-source machine learning platform like TensorFlow. We hope it will greatly advance deep learning and help people use deep learning to make major scientific discoveries.

TensorFlow's goal is to be a machine learning platform that everyone can use: to help machine learning researchers and developers express their ideas, conduct exploratory research, build their own systems, and create AI applications and products for specific scenarios. A common platform makes it easier to exchange ideas across the industry and drives innovation. Google uses TensorFlow at large scale for both research and products, so the industry can build on Google's practices and experience.

We open-sourced TensorFlow at the end of 2015, and it has grown very quickly over the past two years. We now ship a new release roughly every month or two, adding more platform support, more tools, and simpler APIs.

To date, TensorFlow has been downloaded more than 11 million times worldwide, which shows the enthusiasm of developers around the globe.

Deep learning has many different models, and training a model well takes a lot of machine learning experience, which is still a big challenge for ordinary companies. AutoML is a very important topic; its basic idea is to automate more of the machine learning process. Google has related work called Learning to Learn, which is making good progress.

Today, building a machine learning solution requires machine learning experts, data, and a lot of computation:

Solution = Machine Learning Expertise + Data + Computation

Machine learning experts take a long time to train. So we began to ask: can we use more computing power to address the shortage of talent? That would give:

Solution = Data + 100 × Computation

We use reinforcement learning to search for neural network architectures: a controller generates candidate models according to learned probabilities, each model is trained for a few hours and then evaluated on a held-out set, and the evaluation result is used as the reward signal for reinforcement learning, which makes the controller more likely to choose better models in the next iteration.
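The search loop described above can be illustrated with a toy policy-gradient controller. This is a minimal sketch under stated assumptions, not Google's NAS system: the search space (`OPS`, `WIDTHS`), the reward table in `evaluate`, and all function names are hypothetical, and `evaluate` replaces hours of real training with a lookup. To keep the example deterministic, it enumerates the tiny search space and computes the exact expected REINFORCE gradient, whereas the real system samples architectures and estimates that gradient from their rewards.

```python
import itertools
import math

# Hypothetical search space: each architecture picks one operation and one width.
OPS = ["conv3x3", "conv5x5", "maxpool"]
WIDTHS = [16, 32, 64]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def evaluate(op, width):
    """Hypothetical stand-in for 'train for a few hours, then evaluate'.
    Returns a made-up validation accuracy that serves as the reward."""
    op_score = {"conv3x3": 0.30, "conv5x5": 0.20, "maxpool": 0.05}[op]
    width_score = {16: 0.10, 32: 0.40, 64: 0.30}[width]
    return op_score + width_score


def search(steps=300, lr=2.0):
    """REINFORCE-style updates of the controller's logits (exact expectation)."""
    op_logits = [0.0] * len(OPS)
    width_logits = [0.0] * len(WIDTHS)
    for _ in range(steps):
        p_op, p_w = softmax(op_logits), softmax(width_logits)
        # Every (op, width) pair with its probability under the policy and its reward.
        archs = [(i, k, p_op[i] * p_w[k], evaluate(OPS[i], WIDTHS[k]))
                 for i, k in itertools.product(range(len(OPS)), range(len(WIDTHS)))]
        baseline = sum(p * r for _, _, p, r in archs)  # expected reward
        g_op = [0.0] * len(OPS)
        g_w = [0.0] * len(WIDTHS)
        for i, k, p, r in archs:
            advantage = r - baseline
            # d log softmax / d logit_j = 1[j == chosen] - prob_j
            for j in range(len(OPS)):
                g_op[j] += p * advantage * (float(j == i) - p_op[j])
            for j in range(len(WIDTHS)):
                g_w[j] += p * advantage * (float(j == k) - p_w[j])
        # Gradient ascent on expected reward: better-than-average choices gain probability.
        op_logits = [v + lr * g for v, g in zip(op_logits, g_op)]
        width_logits = [v + lr * g for v, g in zip(width_logits, g_w)]
    return softmax(op_logits), softmax(width_logits)


p_op, p_w = search()
best = (OPS[p_op.index(max(p_op))], WIDTHS[p_w.index(max(p_w))])
```

In a real search every call to `evaluate` costs hours of accelerator time, which is why the text stresses how much computation AutoML needs.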

This figure shows a model architecture found by neural architecture search. The structure looks very complicated and is hard to understand; it is less hierarchical and less intuitive than a human-designed model. In actual tests, however, it performs very well, matching or beating the best models designed by humans so far.

Over many years, machine learning experts have done extensive research and hand-designed a variety of sophisticated models that trade off computational cost against accuracy.

If we want higher accuracy, we need more computation, as with ResNet. A simpler model such as Google's MobileNet is less accurate but needs little computation, so it is well suited to mobile devices. In between lies a range of models with moderate computation and accuracy. Over the years, top machine learning experts have kept refining these architectures on the basis of earlier work.

The new models found through AutoML beat the results of many years of human research: at the same accuracy they need less computation, and at the same computation they are more accurate. Google has released the model learned through AutoML under the name NASNet.

This is an inspiring start that shows the great promise of AutoML.

AutoML also places much greater demands on computation. However, there is still a lot of room to improve computation itself, because it can be specifically optimized for deep learning.

For example, computation on traditional CPUs is high-precision, but deep learning can tolerate lower precision, which makes computation faster.
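One concrete reduced-precision format is bfloat16, which TPUs support: it keeps float32's sign bit and 8 exponent bits but only 7 mantissa bits, so it covers the same numeric range with less precision. The minimal sketch below produces a bfloat16 value by truncating a float32 bit pattern; real converters usually round to nearest-even, so treat truncation as a simplifying assumption. `to_bfloat16` is a hypothetical helper name.

```python
import math
import struct


def to_bfloat16(x):
    """Reduce a float to bfloat16 precision by truncation (round toward zero).

    bfloat16 is the upper 16 bits of a float32: 1 sign bit, 8 exponent bits,
    7 mantissa bits. Dropping the lower 16 bits keeps the range of float32
    but only about 2-3 significant decimal digits.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))  # float32 bit pattern
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))[0]


for value in (1.0, math.pi, 0.1):
    approx = to_bfloat16(value)
    print(f"{value!r} -> {approx!r} (relative error {abs(approx - value) / value:.1e})")
```

The relative error of truncation stays below 2^-7 (about 0.8%), which many deep learning workloads tolerate, while halving memory traffic and simplifying the multiplier hardware.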

In addition, neural network computation consists mainly of matrix operations, so special-purpose hardware can be designed to accelerate them.
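To see why matrix operations dominate, note that a fully connected layer applied to a batch is one matrix multiplication followed by a cheap elementwise bias and activation. The pure-Python sketch below makes that explicit; the weights are made-up values and `matmul` and `dense_relu` are illustrative helpers, not any library's API.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both as nested lists."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(len(b[0]))]
            for row in a]


def dense_relu(x, weights, bias):
    """One fully connected layer, relu(x @ W + b), for a batch of row vectors.
    Almost all of the arithmetic happens inside matmul."""
    z = matmul(x, weights)
    return [[max(0.0, v + b_j) for v, b_j in zip(row, bias)] for row in z]


batch = [[1.0, 2.0], [0.5, -1.0]]          # 2 examples, 2 features each
W = [[0.1, -0.2, 0.3], [0.4, 0.5, -0.6]]   # 2 inputs -> 3 units (made-up values)
b = [0.0, 0.1, 0.2]
out = dense_relu(batch, W, b)
```

For a batch of m examples, k inputs, and n units, the multiply costs m·k·n multiply-adds while the bias and activation cost only m·n, which is the part specialized hardware such as the TPU is built to speed up.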

For example, Google designed new hardware, the TPU, specifically for deep learning. The first-generation TPU could only run inference; the second generation can do both inference and training. The third-generation TPU, announced at Google I/O in May this year, is 8 times faster than the second generation. Computing power keeps improving.

We also make TPUs available to industry and academia through Google Cloud Platform. In particular, we launched the TensorFlow Research Cloud, a pool of TPUs offered free of charge to top researchers to jointly advance open machine learning research. You can apply at g.co/tpusignup.

To sum up, deep neural networks and machine learning have brought major breakthroughs, and we can think about how to use deep neural networks to help solve some of the world's major challenges.

TensorFlow-based applications

In the second part, let's talk about applications built on TensorFlow.

One of the major breakthroughs in AI was AlphaGo beating the top human players at Go. Before that, most people believed this would take many more years. DeepMind's AlphaGo is also built on TensorFlow. Its earlier versions used a large number of TPUs and required a lot of computation.

The initial version of AlphaGo relied on human experience, such as using records of past human Go games as input, but the later AlphaGo Zero learns without any human experience.

The AlphaGo work was also published as papers, and we saw Chinese companies quickly build strong Go systems based on those papers and TensorFlow.

This illustrates TensorFlow's significance well. With such an open platform, researchers and developers around the world can easily communicate, quickly raise the level of machine learning, and rapidly build new products and systems on top of others' outstanding work.

Autonomous driving is another example: TensorFlow is used to automatically identify moving people and objects, as well as traffic lights.

Throughout the history of science, astronomy has posed many very challenging problems. Finding interesting signals in the vast sky, such as an Earth-like planet orbiting a star the way the Earth orbits the Sun, is very hard.

Astronomers used TensorFlow-based deep learning to search a large number of signals from space for Earth-like planets and found one called Kepler-90i, the eighth planet discovered orbiting the star Kepler-90.

In agriculture, farms in the Netherlands monitor dairy cows' behavioral and physical data and use TensorFlow to analyze the cows' health, for example whether they are exercising.

Forest rangers in the Brazilian Amazon use TensorFlow to recognize sounds in the jungle and detect whether illegal loggers are present.

In Africa, developers used TensorFlow to build a mobile app that determines whether a plant is diseased: you simply take a photo of the plant.

Google open-sourced Magenta, a project based on TensorFlow. One of its features is automatic music generation: you play a note, and the program suggests the next one.

In art and culture, you can take a photo and the program finds artworks that resemble it.

Google Translate can translate in real time without a network connection. Take a box with "Milk" printed on it: just open Google Translate and point your phone at it, and the app automatically recognizes the text, translates "milk", and overlays the translation on the original image. This is very useful for travelers abroad.

TensorFlow is used by a large number of Google products. In speech, it powers both recognition and synthesis; for example, DeepMind's speech synthesis algorithm WaveNet produces very good results. For human-machine conversation, Google demonstrated an AI system called Google Duplex at Google I/O.

In vision, Google Photos automatically analyzes all your photos, identifies the people and objects in them, and labels them, so you can search your photos directly without tagging them by hand. Google's Pixel phones, in portrait mode, automatically keep the foreground sharp and blur the background.

These are product breakthroughs brought about by machine learning.

In robotics, TensorFlow can be used to teach quadruped robots to stand and balance.

Finally, TensorFlow has also helped optimize energy consumption in Google's data centers. When machine learning control is switched on, energy consumption drops significantly, and once it is switched off, consumption rises quickly again.

TensorFlow basics

In the third part, I will cover some TensorFlow basics.

TensorFlow Background

Machine learning is becoming more and more complex, and so are the networks we build. Managing this complexity is a big challenge for researchers. For example, the Inception v3 model has 25 million parameters.

The more complex a model is, the more computation it requires, often more than a single computer can provide, so large-scale distributed computation is needed. Managing that distributed computation is a problem in itself.

Furthermore, machine learning traditionally ran on servers, but more and more computation now happens on mobile phones and other devices. Managing these diverse devices and heterogeneous systems is another challenge.

We hope TensorFlow helps manage these complexities, so that researchers can focus on research and product teams can focus on products.

TensorFlow is an open-source software platform whose goal is to bring machine learning to everyone and to drive its progress.

On the one hand, TensorFlow aims to help people try new ideas quickly and explore the cutting edge. On the other hand, it aims to be very flexible, meeting both the needs of research and the needs of large-scale industrial products. Faced with such varied needs, how can everyone use the same framework to express their ideas? This is an important design goal of TensorFlow.

Research may be small-scale, but a product may involve hundreds of servers, so TensorFlow needs good support for managing distributed computation.

The designers took these factors into account from the start. Most importantly, TensorFlow was designed to meet Google's internal product requirements and has been tested at scale by many Google products and teams. Before TensorFlow, Google used an internal system called DistBelief. We drew on the experience with DistBelief to build the new system, validated it in many projects, and added new features based on real product needs.

Google Brain has many researchers who continually publish papers, and their research is also turned into products. Because everyone expresses their work in the same framework, TensorFlow, research results are much easier to turn into products.

To date, the TensorFlow project on GitHub has more than 30,000 commits, more than 1,400 contributors, and more than 6,900 pull requests.

TensorFlow architecture

TensorFlow provides a complete set of machine learning tools. Let's look at its overall architecture.

TensorFlow has a distributed execution engine that lets TensorFlow programs run on many hardware platforms: CPUs, GPUs, TPUs, mobile platforms such as Android and iOS, and a variety of other heterogeneous hardware.

On top of the execution engine, TensorFlow supports several front-end languages. Python is the most commonly used; Java, C++, and others are also supported.

On top of the front ends, TensorFlow provides a rich set of machine learning toolkits. Besides the well-known neural network support, there are decision trees, SVMs, probabilistic methods, random forests, and more, many of which are common tools in machine learning competitions.

TensorFlow is very flexible. Its high-level APIs are easy to use, and its low-level APIs make it possible to build complex neural networks, for example by defining a network from basic operators.

Keras is a high-level API for defining neural networks that is popular in the community, and TensorFlow supports it very well.

We also provide the Estimator family of APIs, which let you customize training and evaluation functions. Estimators can be distributed efficiently and integrate well with TensorBoard and TensorFlow Serving.

At the top level, there are a number of premade Estimators that work out of the box.

TensorFlow also provides a comprehensive toolchain. For example, TensorBoard makes it easy to visualize embeddings, display complex model structures at multiple levels, and show performance data during training.

It supports mobile platforms such as iOS and Android, as well as embedded platforms such as the Raspberry Pi.

Supported languages include Python, C++, Java, Go, R, and others, and we recently released support for JavaScript and Swift.

Many schools and institutions offer TensorFlow courses. In China, Peking University, the University of Science and Technology of China, and other schools have set up TensorFlow-related courses; abroad, top universities such as Stanford and Berkeley, as well as online education platforms such as Udacity, Coursera, and deeplearning.ai, offer TensorFlow courses. We also support the Ministry of Education's industry-university cooperation program to help universities establish machine learning courses, and there will be more and more original TensorFlow-based courses in China.

TensorFlow APIs

Let's talk about the TensorFlow API.

TensorFlow lets users define computation graphs, where a graph represents a computation. Each node in a graph represents an operation or a piece of state, and operations can run on any device; data flows along the edges of the graph. Graphs can be defined in different programming languages such as Python, and the graph can then be compiled and optimized. This design separates the definition of the graph from the actual computation.
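The separation between defining a graph and running it can be shown with a toy dataflow graph. This is a minimal sketch, not TensorFlow's implementation: `Node`, `placeholder`, `add`, `mul`, and `run` are hypothetical names, and device placement and graph optimization are left out. In TensorFlow 1.x graph mode, the corresponding roles were played by `tf.placeholder` and `Session.run`.

```python
class Node:
    """A node in a tiny dataflow graph: an operation plus its input edges."""

    def __init__(self, op, inputs=(), name=None):
        self.op, self.inputs, self.name = op, list(inputs), name


def placeholder(name):
    return Node("placeholder", name=name)


def add(a, b):
    return Node("add", [a, b])


def mul(a, b):
    return Node("mul", [a, b])


def run(node, feed):
    """Execute the graph: values flow from the fed inputs along the edges."""
    if node.op == "placeholder":
        return feed[node.name]
    left, right = (run(n, feed) for n in node.inputs)
    return left + right if node.op == "add" else left * right


# Definition: nothing is computed yet; we only describe y = x * w + b.
x, w, b = placeholder("x"), placeholder("w"), placeholder("b")
y = add(mul(x, w), b)

# Execution: the same graph can be run many times with different inputs.
print(run(y, {"x": 3.0, "w": 2.0, "b": 1.0}))  # 7.0
```

Because the whole graph exists before any value is computed, a real system can inspect it, rewrite it, and place its nodes on different devices before execution.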

TensorFlow makes it easy to express linear regression: you can use LinearRegressor, a packaged Estimator. Deep neural networks are just as easy to express; with DNNClassifier, for example, you only need to specify the number of nodes in each hidden layer.
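To make "you only need to specify the number of nodes in each hidden layer" concrete, the sketch below shows how a list of hidden-layer sizes determines every weight and bias shape of a fully connected classifier. It is a pure-Python illustration that assumes one output logit per class, not the Estimator's actual code; `dense_stack_shapes` and `count_params` are hypothetical helpers.

```python
def dense_stack_shapes(n_features, hidden_units, n_classes):
    """Weight and bias shapes of a fully connected classifier built from a
    list of hidden-layer sizes (assumes one output logit per class)."""
    sizes = [n_features] + list(hidden_units) + [n_classes]
    return [((fan_in, fan_out), (fan_out,))
            for fan_in, fan_out in zip(sizes, sizes[1:])]


def count_params(shapes):
    """Total number of trainable weights and biases."""
    return sum(w[0] * w[1] + b[0] for w, b in shapes)


shapes = dense_stack_shapes(n_features=4, hidden_units=[10, 20], n_classes=3)
print(shapes)                # [((4, 10), (10,)), ((10, 20), (20,)), ((20, 3), (3,))]
print(count_params(shapes))  # 4*10+10 + 10*20+20 + 20*3+3 = 333
```

Everything else (initialization, the training loop, checkpoints) is left to the packaged Estimator, which is what makes this interface so compact.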

TensorFlow automatically computes gradients with the backpropagation algorithm and runs gradient descent. These computations can also be distributed across multiple devices, so executing the graph becomes a distributed process.
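What a packaged linear regressor does during training can be sketched as a forward pass, a backward pass, and an update. This is a minimal pure-Python sketch under stated assumptions: the gradients of the mean squared error are derived by hand here, whereas TensorFlow derives them automatically from the graph, and `train_linear_regression` is a hypothetical name.

```python
def train_linear_regression(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: residuals of the current predictions.
        residuals = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of mean((w*x + b - y)^2) w.r.t. w and b.
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Gradient descent update.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1
w, b = train_linear_regression(xs, ys)
```

The learning rate matters: for this data, steps larger than about 0.15 make the updates diverge, which is one reason packaged Estimators ship with sensible default optimizers.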

tf.layers is another API whose functions correspond to neural network layers. A CNN, for example, has multiple CONV layers and multiple MAX POOLING layers, and each layer type has a corresponding tf.layers function (such as tf.layers.conv2d and tf.layers.max_pooling2d), which makes it easy to stack layers. These packaged layers incorporate engineering best practices.
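The computation behind a convolution layer followed by a max-pooling layer can be written out by hand. This pure-Python sketch covers a single channel with stride 1 and no padding for the convolution and a non-overlapping 2×2 window for pooling; `tf.layers.conv2d` and `tf.layers.max_pooling2d` add channels, padding options, strides, and trainable kernels on top of this. The image and kernel values are made up, and the helper names are hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution, single channel, stride 1. Like most deep
    learning libraries, this is technically cross-correlation (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


image = [[1, 2, 3, 0, 1],
         [0, 1, 2, 3, 1],
         [1, 0, 1, 2, 2],
         [2, 1, 0, 1, 3],
         [1, 2, 1, 0, 1]]
kernel = [[1, -1],
          [1, -1]]  # made-up vertical-edge filter

feature_map = conv2d(image, kernel)  # 4 x 4
pooled = max_pool2d(feature_map)     # 2 x 2
```

Stacking these two functions is exactly the CONV, then MAX POOLING pattern described above; a layers API mainly saves you from writing and debugging such loops yourself.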

tf.keras

tf.keras is another API that is very popular in the community. Suppose, for example, that you want to build a toy program that understands a video and answers questions about it. You ask: "What is this girl doing?" The program answers: "Packing." You ask: "What color T-shirt is this girl wearing?" The program answers: "Black."

You might build the network like this. On the left is the video-processing branch: InceptionV3 recognizes the content of each frame, a TimeDistributed layer applies it across the sequence of frames, and an LSTM layer sits on top. On the right, an Embedding layer processes the question, followed by an LSTM. The two branches are then merged, followed by two Dense layers. The result is a surprisingly smart program.

With tf.keras, the core logic takes only a few dozen lines of code; using InceptionV3, for example, takes a single line.

Eager Execution (Dynamic Graph Support)

In addition to the static graphs described earlier, TensorFlow supports dynamic graphs through Eager Execution. It removes boilerplate code, makes programs simpler, and reports errors immediately.

Of course, static graphs have their own advantages: they allow extensive optimization ahead of time, at both the graph level and the compiler level; they can be deployed on servers or mobile phones that do not run Python; and they can be distributed at large scale.

The advantage of eager execution is that it lets you iterate quickly and makes debugging easier.

The good news is that TensorFlow makes it easy to switch between eager execution and static graph execution, so you can balance efficiency and ease of use.
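One common way to turn an eagerly written function into a graph is tracing: run it once on symbolic stand-in values that record each operation instead of computing it. The toy tracer below illustrates that idea only; it is not how TensorFlow is implemented, and `Traced`, `trace`, and `run_graph` are hypothetical names. Later TensorFlow versions expose this kind of conversion as `tf.function`.

```python
import operator

BINARY_OPS = {"add": operator.add, "mul": operator.mul}


class Traced:
    """Stands in for a value during tracing; each operation records a graph node."""

    def __init__(self, graph, node_id):
        self.graph, self.node_id = graph, node_id

    def _record(self, op, other):
        rhs = ("node", other.node_id) if isinstance(other, Traced) else ("const", other)
        self.graph.append((op, ("node", self.node_id), rhs))
        return Traced(self.graph, len(self.graph) - 1)

    def __add__(self, other):
        return self._record("add", other)

    def __mul__(self, other):
        return self._record("mul", other)

    # Both ops are commutative, so constants on the left are recorded the same way.
    __radd__ = __add__
    __rmul__ = __mul__


def trace(fn):
    """Call fn once on a symbolic input; return (graph, output node id)."""
    graph = [("input", None, None)]  # node 0 is the function's argument
    out = fn(Traced(graph, 0))
    return graph, out.node_id


def run_graph(graph, output_id, x):
    """Replay the recorded graph on a concrete input without calling fn again."""
    values = []

    def resolve(ref):
        kind, payload = ref
        return values[payload] if kind == "node" else payload

    for op, lhs, rhs in graph:
        if op == "input":
            values.append(x)
        else:
            values.append(BINARY_OPS[op](resolve(lhs), resolve(rhs)))
    return values[output_id]


def f(x):
    return x * x + x * 3.0  # plain Python: runs eagerly when given a number


eager_result = f(2.0)                         # computed immediately
graph, out_id = trace(f)                      # recorded once as a graph
graph_result = run_graph(graph, out_id, 2.0)  # replayed from the graph
```

The same function body serves both modes: debug it eagerly, then trace it once and hand the recorded graph to an optimizer or a runtime that does not need Python.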

Advanced TensorFlow topics

In the fourth part, let's talk about some advanced TensorFlow topics.

TensorFlow Lite

TensorFlow Lite is a lightweight machine learning library designed specifically for mobile and embedded devices; it is smaller and faster. We provide tools to convert TensorFlow models into the TensorFlow Lite format, and on the device, a TensorFlow Lite interpreter executes these models.
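One way a mobile converter can make a model smaller is post-training quantization, which TensorFlow Lite's converter offers: float32 weights are stored as 8-bit integers plus a scale and a zero point. The sketch below shows a generic affine int8 scheme, w ≈ scale × (q − zero_point); it illustrates the idea and is not a description of TensorFlow Lite's internals. The weights are made-up values and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Affine quantization of float weights to int8 codes.

    Returns (codes, scale, zero_point) with w ~ scale * (q - zero_point).
    The range is widened to include 0 so that 0.0 is represented exactly.
    """
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # 256 levels; avoid a zero scale
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [scale * (q - zero_point) for q in codes]


w = [-0.51, 0.0, 0.27, 1.02]        # made-up float32 weights
q, s, z = quantize_int8(w)          # 1 byte per weight instead of 4
restored = dequantize(q, s, z)
```

Storage drops by roughly 4×, and each restored weight is within half a quantization step (`scale / 2`) of the original, which many models tolerate with little loss of accuracy.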

On Android, TensorFlow Lite uses the Android Neural Networks API (NN API) to take advantage of hardware acceleration. TensorFlow Lite also supports iOS well.

TensorFlow.js

TensorFlow.js, our newest release, is a JavaScript library for TensorFlow that can use browser features such as WebGL to accelerate computation. This means TensorFlow programs can run in even more environments, bringing AI everywhere.

For example, TensorFlow.js can be used to build a Pac-Man game in which the computer's camera tracks your head to control Pac-Man's movement, all running in the browser.

Distributed TensorFlow

Distributed execution is essential for large-scale models, and TensorFlow makes data parallelism easy. For example, you can write a cluster description that specifies which machines are compute workers ("worker") and which are parameter servers ("ps"); then, when you define the graph, you can assign computations to different devices for distributed execution.
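Synchronous data parallelism with workers and a parameter server can be simulated in a single process. In this minimal sketch, the `CLUSTER` dictionary mimics the "cluster" part of a `TF_CONFIG` environment variable, but its host names are made up and nothing here starts real servers; the functions `worker_gradient` and `parameter_server_step` are hypothetical. The point it shows is that summing the gradients from each worker's data shard gives the same update as computing the gradient on the full batch.

```python
# Hypothetical cluster description, shaped like the "cluster" part of a
# TF_CONFIG environment variable; the host names are made up.
CLUSTER = {
    "ps": ["ps0.example.com:2222"],
    "worker": ["worker0.example.com:2222", "worker1.example.com:2222"],
}


def worker_gradient(w, shard):
    """Sum over one worker's shard of d/dw (w*x - y)^2."""
    return sum(2.0 * (w * x - y) * x for x, y in shard)


def parameter_server_step(w, shards, lr, total_examples):
    """One synchronous step: each worker computes a gradient on its own shard
    (in parallel on a real cluster), and the parameter server averages them
    and updates the shared parameter w."""
    grads = [worker_gradient(w, shard) for shard in shards]
    return w - lr * sum(grads) / total_examples


data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]  # y = 3x
num_workers = len(CLUSTER["worker"])
shards = [data[i::num_workers] for i in range(num_workers)]  # one shard per worker

w = 0.0
for _ in range(100):
    w = parameter_server_step(w, shards, lr=0.05, total_examples=len(data))
```

Since the combined update matches single-machine training, adding workers mainly increases how much data is processed per step, which is what makes data parallelism attractive for large models.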

In addition, Google backed the open-source Kubernetes project for managing large-scale computing resources, which has been widely adopted in industry, especially by cloud platforms. To make it easier to run machine learning on large clusters, Google also initiated the Kubeflow project, which is worth following.

For benchmarks, Google has also published its testing process. The results show that TensorFlow scales very well: performance grows roughly linearly with the number of machines.
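"Scales linearly" can be made precise with two standard quantities. Let X(n) be the training throughput (for example, examples per second) on n machines. The speedup and scaling efficiency are

```latex
S(n) = \frac{X(n)}{X(1)}, \qquad E(n) = \frac{S(n)}{n}
```

Perfectly linear scaling means S(n) = n, so E(n) = 1. As a purely illustrative calculation, not a benchmark result: if 8 machines delivered 7.6 times the single-machine throughput, the efficiency would be E(8) = 7.6 / 8 = 0.95.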

Last month, MLPerf was also released, a benchmark that lets everyone compare machine learning performance publicly and should help drive the industry forward.

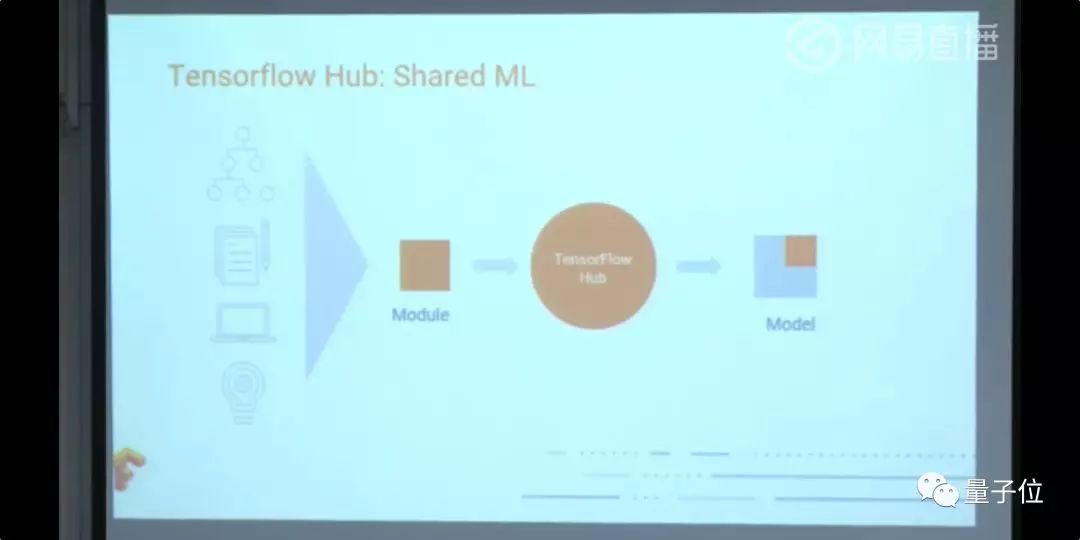

TF Hub

To make it easier to share models, we released TensorFlow Hub (TF Hub). Just as developers find and combine code from GitHub, people designing machine learning models can find different trained models and combine them to build more powerful capabilities.

A module contains a graph together with its trained weights. It is a self-contained piece of a model that we can assemble, reuse, or retrain.

We have already published many modules, covering images, text, and more, each of which took a lot of GPU training to produce. Developers can apply transfer learning on top of them and, at small cost, use existing models to help solve their own problems.
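A common transfer-learning recipe keeps a pretrained module frozen and trains only a small new "head" on its outputs. In this minimal sketch, `pretrained_features` is a hypothetical stand-in for a TF Hub module (here it just returns [x, x²]); real code would load a published module instead. The head is a logistic regression trained by gradient descent on a small made-up dataset, and all names are illustrative.

```python
import math


def pretrained_features(x):
    """Hypothetical frozen module standing in for a TF Hub module's trained
    graph and weights. It is only called, never updated, during training."""
    return [x, x * x]


def logit(w, b, x):
    f = pretrained_features(x)
    return w[0] * f[0] + w[1] * f[1] + b


def train_head(examples, lr=0.5, steps=1000):
    """Fit a new logistic-regression head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    n = len(examples)
    for _ in range(steps):
        gw, gb = [0.0, 0.0], 0.0
        for x, label in examples:
            f = pretrained_features(x)
            p = 1.0 / (1.0 + math.exp(-logit(w, b, x)))
            err = p - label  # derivative of the log-loss w.r.t. the logit
            gw = [g + err * fi for g, fi in zip(gw, f)]
            gb += err
        # Only the head's parameters change; the module stays frozen.
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def predict(w, b, x):
    return 1 if logit(w, b, x) > 0 else 0


# Small labeled dataset for the new task: label 1 when |x| > 1.
examples = [(-2.0, 1), (-1.5, 1), (-0.5, 0), (0.0, 0), (0.5, 0), (1.5, 1), (2.0, 1)]
w, b = train_head(examples)
```

The new task is not linearly separable in x alone, but it is in the module's features, which is the kind of "small price" reuse the paragraph describes: only three parameters are trained.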

tf.data

Another technique for speeding up machine learning is tf.data. For example, training on CPUs alone is slow; adding GPUs speeds training up dramatically, but then the CPU-side input processing becomes the bottleneck, because data has to be loaded and transformed before it can be used for training.

As you know, a major problem in computer architecture research is how to speed things up through parallelism, and keeping both CPUs and GPUs fully busy is an important part of improving machine learning systems. tf.data provides a set of tools that help developers read and process input data in parallel.
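The key idea behind an efficient input pipeline is overlap: prepare the next examples while the accelerator is busy with the current ones. tf.data expresses this with methods such as `Dataset.map(..., num_parallel_calls=...)` and `Dataset.prefetch(buffer_size)`. The sketch below shows only the prefetching idea with one background thread and a bounded queue; it is not tf.data's implementation, and `preprocess` and `prefetching_pipeline` are hypothetical names.

```python
import queue
import threading


def preprocess(raw):
    """Hypothetical stand-in for decoding and augmenting one input example."""
    return raw * 2


def prefetching_pipeline(raw_items, buffer_size=4):
    """Yield preprocessed items while a background thread prepares the next
    ones, so input preparation overlaps with the consumer's training work."""
    buf = queue.Queue(maxsize=buffer_size)
    sentinel = object()

    def producer():
        for raw in raw_items:
            buf.put(preprocess(raw))  # blocks while the buffer is full
        buf.put(sentinel)             # signal the end of the data

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is sentinel:
            return
        yield item


# The "training loop" consumes examples as soon as they are ready.
consumed = [example for example in prefetching_pipeline(range(10))]
```

The bounded buffer keeps memory use fixed: the producer can run at most `buffer_size` items ahead, and with a single producer the output order is preserved.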

In addition, TensorFlow includes many packages and features related to training, such as Estimators, feature engineering, XLA, and TensorFlow Serving, which can be readily applied across many industries.

TFX

When building a real end-to-end machine learning system, besides the core machine learning algorithms discussed above, many related systems have to be built as well, covering data collection, data analysis, model deployment, machine learning resource management, and so on.

Internally, Google has a large-scale machine learning platform based on TensorFlow called TFX (TensorFlow Extended); a paper about it was published at KDD 2017. We have open-sourced some important components, and more are on the way, so it is worth keeping an eye on.

TensorFlow Development in China

Finally, let's talk about the development of TensorFlow in China.

Most of China's leading technology companies use TensorFlow to solve a variety of problems, such as ad recommendation, click-through-rate prediction, OCR, speech recognition, and natural language understanding. They include many well-known companies, such as JD.com, Xiaomi, NetEase, Sogou, 360, Sina, and Lenovo, as well as many startups, such as Zhihu, Mobvoi, Unisound, Kika, and Liulishuo.

JD.com uses TensorFlow for OCR on packages and in many other areas such as images, speech, and advertising, and has built an efficient internal machine learning platform.

Xiaomi uses TensorFlow together with Kubernetes for cluster management to build a distributed learning system that serves Xiaomi's internal businesses and its ecosystem-chain companies.

NetEase uses TensorFlow Lite for document scanning applications and TensorFlow for machine translation.

360 uses TensorFlow for short-video analysis and has also built its own internal machine learning platform.

Research institutions are active too. For example, Professor Zhu Jun's group at Tsinghua University combined Bayesian methods with deep learning and open-sourced a TensorFlow-based system called ZhuSuan ("abacus").

Another example, also from Tsinghua University, is a set of knowledge graph tools built on TensorFlow that has been open-sourced.

The TensorFlow community in China is very active. For example, at the end of March a Beijing viewing event for the TensorFlow Developer Summit was held at Google's Beijing office, where at 1 a.m. Jeff Dean interacted with Chinese TensorFlow developers over video.

In addition, we held events in Chongqing in support of the Ministry of Education's industry-academia collaborative education program, with hundreds of university teachers participating. We hope to train more university teachers to teach machine learning.

For more official documentation in Chinese, visit:

https://tensorflow.google.cn/

Not long ago, we also released Machine Learning Crash Course (MLCC), a two-day course used internally at Google, which a large number of Google engineers have taken over the past few years. Everyone can visit:

https://developers.google.com/machine-learning/crash-course/

We also recommend following the TensorFlow WeChat official account; you can find it by searching for "TensorFlow" in WeChat.

You can also join the TensorFlow Chinese community to learn about and discuss TensorFlow with peers in the industry. Visit:

https://

The basic tenets of the TensorFlow community are openness, transparency, and participation.

We want to be as open as possible, so we published the TensorFlow development roadmap to make the technology more transparent, increase community members' sense of participation, and advance the technology together. The TensorFlow used internally at Google is the same as the one available externally, and we synchronize the two every week.

In this open community, beginners, researchers, and industry developers alike can share their problems, publish their own code, data, and tutorials, help other community members, organize offline technical meetups, and so on. You can also apply for the honorary title of Google Developer Expert (GDE).

Q&A

Q: As a machine learning platform, what directions will TensorFlow take in its future development?

We have published the TensorFlow development roadmap, which you can find at:

https://tensorflow.google.com/community/roadmap

You can also join the relevant special interest groups and offer suggestions for TensorFlow's development.

In summary, I think there are several directions:

Speed. We keep optimizing: better distributed execution, optimizations for a variety of new hardware, faster execution on mobile devices, and so on.

Ease of use. Alongside efficiency, we pursue ease of use. On the one hand, we are making the high-level APIs more usable and improving the Keras API and Eager Execution. On the other hand, we provide more toolsets and reference models that work out of the box.

Mobile. On the mobile side, we have released TensorFlow Lite, whose model coverage and performance keep improving. We have also released TensorFlow.js to further promote ubiquitous AI.

Completeness. We have released TensorFlow Hub to promote model sharing and reuse. In addition, TFX, the system used in Google's internal large-scale production environment, is being open-sourced as well. We hope to further reduce the challenges developers face in building complete end-to-end machine learning systems.

Advancing machine learning: sharing and accelerating research. We look forward to working with the TensorFlow community to solve the world's hardest problems, such as medical problems whose solutions would greatly benefit humanity.

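Q: TensorFlow has greatly lowered the difficulty of deep learning. Does that mean someone with a little technical background can, after some study and practice, use this platform for deep learning or other AI development? Or is a lot more work needed to become skilled in this field?

First of all, that is TensorFlow's design goal: to let as many people as possible use deep learning, try new research ideas, build products, and solve hard problems.

On the one hand, this drives the development of deep learning itself and speeds up AI applications in many areas. These AI applications in turn need many developers from broad backgrounds to push them forward together.

On the other hand, there is still a considerable distance between simply using a few models and doing professional, in-depth optimization. Reaching an average level may take relatively little effort, but achieving breakthroughs at a higher level requires a great deal of experience.

Everyone needs a bit of a craftsman's spirit: understanding the problem, tuning parameters, cleaning and processing data, and so on. All of these take accumulated experience.

We hope to make TensorFlow easier to use and to provide a range of tools that help users, so that beginners can explore more application scenarios while advanced users can do more cutting-edge research.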

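Q: Deep learning has been integrated into every department at Google. Does AI have an effect on every department? Have there been any unexpected results?

My personal impression is that adding deep learning has indeed brought major breakthroughs. Machine translation, speech, natural conversation, image translation, search ranking, ad prediction, and more: many of Google's products have been profoundly influenced by deep learning.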

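Q: Machine learning and deep learning have developed rapidly in recent years. From technology to applications, where do you expect breakthroughs in the next few years?

Personally, I think autonomous driving, medical diagnosis, and language dialogue are all very promising. I look forward even more to AI helping humanity make progress on major problems, such as agriculture, the environment, education, and free communication across languages, and I hope TensorFlow can help create opportunities for breakthroughs there.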

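Q: How does Google balance research and products? Which one drives the other? Do people work on topics they enjoy, or is practical research driven by demand?

Research is based on real problems and works closely with productization: research results are put into products, which then yield a large amount of real-world feedback. This is a fast, iterative process. Research and products are tightly coupled, and the search for solutions can be driven by real problems. In a sense, Google engineers combine research and product roles: they both study problems and build products.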
