Editor's Note: When you face a new concept, how do you learn it and put it into practice? Do you spend a lot of time learning the whole theory and mastering the algorithms, mathematics, assumptions, and limitations behind it before doing hands-on practice? Or do you start from the simplest foundations and improve your overall grasp of the concept by solving problems in specific projects? In this series of articles, Argument takes the second approach and explores machine learning from the beginning together with the reader.

This is the first article in the "Zero Learning" series: understanding and coding neural networks from scratch in Python and R, by SUNIL RAY, an Analytics Vidhya blogger and senior data science developer in India.

This article covers the basics of how neural networks are built, with an emphasis on applying them, through hands-on coding in Python and R.

Table of Contents

The basic working principle of neural networks

Multilayer perceptron and its basic knowledge

Steps involved in the neural network methodology

Visualizing how a neural network works, step by step

How to implement a neural network in Python with NumPy

How to implement a neural network in R

The mathematical principles of the backpropagation algorithm

The basic working principle of neural networks

If you are a developer or have worked on programming projects, you know how to track down bugs in your code. By varying the inputs and the environment and observing the corresponding outputs, you can locate a bug, because changes in the output hint at which module, or even which line, you should check. Once you find the right spot and debug it repeatedly, you eventually get the desired result.

A neural network works in much the same way. It usually takes several inputs, processes them through the neurons in one or more hidden layers, and returns the result at the output layer. This pass is called the "forward propagation" of the neural network.

After getting the output, the next step is to compare the network's output with the actual result. Since every neuron can contribute to the error in the final output, we want to minimize this loss and bring the output closer to the actual value. So how do we reduce the loss?
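To make "comparing the output with the actual result" concrete, here is a minimal sketch of one common loss. The article does not say which loss it uses, so the squared error below, the function name `squared_error`, and the sample values are all assumptions chosen for illustration.

```python
def squared_error(predicted, actual):
    """Half the sum of squared differences between predictions and targets."""
    return 0.5 * sum((p - a) ** 2 for p, a in zip(predicted, actual))


predicted = [0.8, 0.2, 0.6]   # hypothetical network outputs
actual = [1.0, 0.0, 1.0]      # hypothetical true values

print(squared_error(predicted, actual))  # about 0.12
```

The loss is zero only when every prediction matches its target, and it grows quickly as predictions drift away, which is what training tries to push down.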

In neural networks, a common practice is to reduce the weights of the neurons that contribute more to the loss. Because this happens while traveling back through the neurons of the network to find where the error lies, the process is known as "backpropagation."

To reduce the error in fewer iterations, neural networks also use an algorithm called Gradient Descent, a basic optimization algorithm that helps complete this task quickly and efficiently.
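Gradient descent repeatedly moves a parameter a small step against the gradient of the loss. As a minimal sketch, assuming a toy one-parameter loss L(w) = (w - 3)^2 and a hypothetical helper `gradient_descent` (neither comes from the article), the update rule w ← w - η·dL/dw looks like this:

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - learning_rate * grad(w)."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


# Toy loss L(w) = (w - 3)**2 has derivative 2 * (w - 3) and its minimum at w = 3.
w_opt = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(round(w_opt, 4))  # 3.0
```

With this learning rate, each step shrinks the distance to the minimum by a factor of 0.8; a learning rate that is too large would overshoot and diverge instead.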

Although this description is highly simplified, it captures the basic working principle of neural networks, and this simple understanding is enough to help you build some basic implementations.

Multilayer perceptron and its basic knowledge

Just as atomic theory describes matter as built from discrete units called atoms, the most basic unit of a neural network is the perceptron. So what is a perceptron?

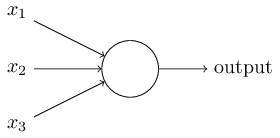

For this problem, we can understand that a perceptron is something that receives multiple inputs and produces an output. As shown below:

sensor

In this example, the perceptron has three inputs but only one output, so the next logical question is: what is the relationship between the inputs and the output? Let's start with some basic approaches and gradually move on to more complex ones.

Here are three ways to define an input-output relationship:

Sum the inputs directly and compute the output based on a threshold. For example, let x1=0, x2=1, x3=1, and set the threshold to 0. If x1+x2+x3>0, the output is 1; otherwise, it is 0. In this scenario, the final output of the perceptron in the image above is 1.

Next, let's add a weight to each input. For example, let the weights of the three inputs x1, x2, and x3 be w1, w2, and w3 respectively, with w1=2, w2=3, and w3=4. To compute the output, we multiply each input by its weight, sum the products (2x1+3x2+4x3), and compare the result with the threshold. Because w3 is the largest weight, x3 has a greater impact on the output than x1 and x2.

Next, let's add a bias (sometimes also called a threshold, but different from the threshold above). Each perceptron has a bias, which can be thought of as an extra weight that makes the perceptron more flexible. The bias plays roughly the same role as the constant b in the linear equation y=ax+b: it lets the function shift up and down. If b=0, the decision boundary must pass through the origin (0,0), which severely limits what the neural network can fit. For example, a perceptron with two inputs needs three weights: one for each input and one for the bias. Likewise, for the three-input perceptron above, the linear form of the input becomes w1x1 + w2x2 + w3x3 + 1 × b.
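The three input-output rules above can be sketched as follows, reusing the article's values x = (0, 1, 1) and w = (2, 3, 4). Two things are assumptions: the weighted case reuses the threshold of 0, and the bias b = -8 is a hypothetical value picked to show how a bias can flip the decision.

```python
def step(z, threshold=0.0):
    """Output 1 if z exceeds the threshold, otherwise 0."""
    return 1 if z > threshold else 0


x = [0, 1, 1]   # x1, x2, x3 from the text
w = [2, 3, 4]   # w1, w2, w3 from the text

# 1) Plain sum of the inputs compared with a threshold of 0.
out_sum = step(sum(x))

# 2) Weighted sum 2*x1 + 3*x2 + 4*x3 compared with the same threshold (assumed 0).
weighted = sum(wi * xi for wi, xi in zip(w, x))
out_weighted = step(weighted)

# 3) Weighted sum plus a bias; b = -8 is a hypothetical value for illustration.
b = -8
out_bias = step(weighted + b)

print(out_sum, out_weighted, out_bias)  # 1 1 0
```

The weighted sum is 7, so adding a bias of -8 moves it below the threshold and changes the output from 1 to 0, which is exactly the "shift" the bias provides.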

However, with this setup, the output of each layer is just a linear transformation of the previous layer's input, which is rather limiting. So the perceptron was developed into what is now called a neuron, which applies a nonlinear transformation (an activation function) to the inputs and the bias.
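The limitation mentioned above can be checked numerically: stacking two purely linear layers is equivalent to a single linear layer, because W2(W1x + b1) + b2 = (W2W1)x + (W2b1 + b2). This is a sketch with hypothetical layer sizes and random weights, using NumPy:

```python
import numpy as np

rng = np.random.default_rng(0)

# Two linear layers (no activation): 3 inputs -> 4 units -> 2 outputs.
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)
x = rng.normal(size=3)

two_layers = W2 @ (W1 @ x + b1) + b2

# The same mapping collapsed into one linear layer.
W, b = W2 @ W1, W2 @ b1 + b2
one_layer = W @ x + b

print(np.allclose(two_layers, one_layer))  # True
```

However many linear layers are stacked, the result is still linear, which is why a nonlinear activation is needed between them.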

What is an activation function?

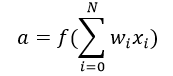

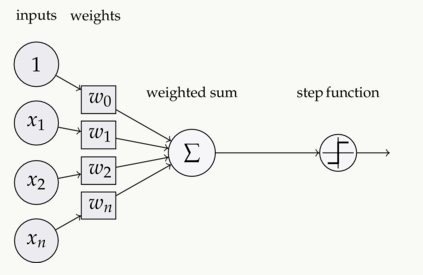

The activation function takes the weighted sum of the inputs (w1x1 + w2x2 + w3x3 + 1×b) as its argument and returns the neuron's output value.

In the formula above, we denote the constant input 1 that multiplies the bias as x0, and the bias b as w0.

Input → weighting → summation → activation function → output
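The pipeline above (input, weighting, summation, activation, output) fits in a few lines. This is a minimal sketch, assuming a sigmoid activation and a hypothetical helper `neuron_output`; it folds the bias in as w0 on a constant input x0 = 1, as described above. With the article's inputs and weights and a hypothetical bias of -7, the weighted sum is exactly 0:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(weights, inputs, bias, activation=sigmoid):
    # Treat the bias as weight w0 on a constant input x0 = 1.
    x = [1.0] + list(inputs)
    w = [bias] + list(weights)
    z = sum(wi * xi for wi, xi in zip(w, x))  # w0*x0 + w1*x1 + w2*x2 + w3*x3
    return activation(z)


print(neuron_output([2, 3, 4], [0, 1, 1], bias=-7))  # 0.5, since sigmoid(0) = 0.5
```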

Activation functions are mainly used to introduce nonlinearity, so that the network can fit nonlinear hypotheses and approximate complex functions. Common choices are Sigmoid, Tanh, and ReLU.
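For reference, here is a sketch of the three activation functions named above as plain Python functions. The formulas are the standard ones; the function names are only illustrative.

```python
import math


def sigmoid(z):
    # Squashes z into (0, 1). Fine for moderate z; math.exp overflows for z below about -709.
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Squashes z into (-1, 1).
    return math.tanh(z)


def relu(z):
    # Zero for negative z, identity for non-negative z.
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 4), round(tanh(z), 4), relu(z))
```

Sigmoid and Tanh saturate for large positive or negative inputs, while ReLU passes positive values through unchanged and zeroes out negative ones.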

Forward Propagation, Back Propagation, and Epoch

So far, we have computed the output from the input, which is called "Forward Propagation". But what if the error between the estimated output and the actual value is too large? In fact, training a neural network can be viewed as a process of trial and error: we update the existing biases and weights based on the error in the output. This backward step is "Back Propagation".

The backpropagation algorithm (BP algorithm) works by measuring the loss, or error, at the output layer and propagating it back through the network. Its purpose is to readjust the weights so as to minimize the loss contributed by each neuron. To achieve this, the first step is to compute the gradient (derivative) of the error at the final output with respect to each node. The specific mathematics will be discussed in detail in the last section, "The Mathematical Principles of the Backpropagation Algorithm."
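As a preview of the "gradient of each node" step, here is a sketch for a single sigmoid neuron. It assumes a squared-error loss L = 0.5(a - y)^2, which the article has not fixed, and hypothetical sample values. The chain rule gives dL/dw_i = (a - y)·a(1 - a)·x_i, and a finite-difference check confirms the analytic gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(w, b, x, y):
    return 0.5 * (forward(w, b, x) - y) ** 2


def gradients(w, b, x, y):
    """Chain rule for L = 0.5 * (a - y)**2 with a = sigmoid(w.x + b)."""
    a = forward(w, b, x)
    delta = (a - y) * a * (1 - a)      # dL/dz = dL/da * da/dz
    grad_w = [delta * xi for xi in x]  # dz/dw_i = x_i
    grad_b = delta                     # dz/db = 1
    return grad_w, grad_b


# Hypothetical weights, bias, input, and target.
w, b, x, y = [0.5, -0.3, 0.8], 0.1, [1.0, 0.0, 1.0], 1.0
grad_w, grad_b = gradients(w, b, x, y)

# Sanity check: compare dL/dw_0 with a central finite difference.
eps = 1e-6
w_plus = [w[0] + eps] + w[1:]
w_minus = [w[0] - eps] + w[1:]
numeric = (loss(w_plus, b, x, y) - loss(w_minus, b, x, y)) / (2 * eps)
print(grad_w[0], numeric)
```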

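One round of forward propagation followed by backpropagation is what we commonly call a training iteration. A full pass over the entire training set is called an epoch; when every update uses all of the training data, one iteration and one epoch coincide.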
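Putting forward propagation, backpropagation, and the gradient descent update together in a loop gives a minimal training sketch. It uses a hypothetical toy dataset (logical OR), a single sigmoid neuron, and, as a swapped-in choice, the cross-entropy loss, for which the output error simplifies to a - y. Because every update here uses the whole training set, each loop iteration is also one epoch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical toy dataset: logical OR of two binary inputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

rng = np.random.default_rng(42)
w = rng.normal(scale=0.1, size=2)
b = 0.0
learning_rate = 1.0

for epoch in range(1000):
    # Forward propagation over the whole training set.
    a = sigmoid(X @ w + b)
    # Backpropagation: with a sigmoid output and cross-entropy loss,
    # dLoss/dz simplifies to (a - y) for each sample.
    delta = a - y
    grad_w = X.T @ delta / len(y)
    grad_b = delta.mean()
    # Gradient descent update of the weights and bias.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(predictions)  # [0 1 1 1]
```

After training, the neuron classifies all four OR inputs correctly. On other data, the learning rate and number of epochs would typically need tuning.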

Multilayer perceptron

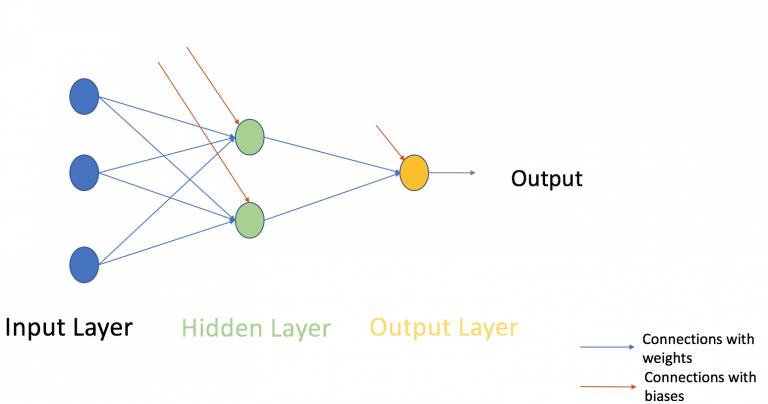

Now let's move back to the example and focus on the multilayer perceptron. As of now, we have seen only one single input layer consisting of three input nodes x1, x2, x3, and one output layer containing only a single neuron. Admittedly, if you are solving linear problems, a single-layer network can do this, but if you want to learn nonlinear functions, then we need a multi-layer perceptron (MLP), which inserts a layer between the input layer and the output layer. Hidden layer. As shown below:

The green part of the figure is the hidden layer. Although the figure shows only one hidden layer, such a network can contain several. Note also that an MLP consists of at least three layers of nodes and that its layers are fully connected: every node in each layer (except the input and output layers) is connected to every node in the previous layer and in the next layer.
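A fully connected forward pass through one hidden layer can be sketched with NumPy. The hidden layer size, the random weights, and the sigmoid activation in both layers are assumptions for illustration; each matrix multiplication connects every node in one layer to every node in the next.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(0)
n_inputs, n_hidden, n_outputs = 3, 4, 1  # hidden size 4 is an arbitrary choice

# Fully connected weights: input -> hidden and hidden -> output.
W_hidden = rng.normal(size=(n_inputs, n_hidden))
b_hidden = np.zeros(n_hidden)
W_out = rng.normal(size=(n_hidden, n_outputs))
b_out = np.zeros(n_outputs)


def mlp_forward(X):
    hidden = sigmoid(X @ W_hidden + b_hidden)  # hidden layer activations
    return sigmoid(hidden @ W_out + b_out)     # output layer activation


# Two samples, each with three features (x1, x2, x3).
X = np.array([[0.0, 1.0, 1.0],
              [1.0, 0.0, 1.0]])
output = mlp_forward(X)
print(output.shape)  # (2, 1)
```

Adding more hidden layers would simply insert more `sigmoid(previous @ W + b)` steps between the input and the output.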

With this in mind, we can move on to the next topic: optimization algorithms for neural networks (error minimization). Here, we mainly introduce the simplest one, gradient descent.

Batch gradient descent and stochastic gradient descent

Gradient descent generally comes in three forms: Batch Gradient Descent, Stochastic Gradient Descent, and Mini-Batch Gradient Descent. Since this article is an introduction, let's first look at full batch gradient descent (Full BGD) and stochastic gradient descent (SGD).

Both forms use the same update rule, optimizing the network by updating the MLP's weights. The difference lies in how much data each update uses. Full batch gradient descent reduces the error by updating the weights repeatedly, using all of the training data for every update, which takes a long time when the dataset is large. Stochastic gradient descent uses only one or a few samples (rather than all of the data) for each update, so each update takes less time than in the full batch method.

Here is an example. Suppose we have a dataset with 10 data points and a model with two weights, w1 and w2.

Full batch gradient descent: use all 10 data points to compute the change Δw1 in weight w1 and the change Δw2 in weight w2, then update w1 and w2.

Stochastic gradient descent: use one data point to compute the change Δw1 in weight w1 and the change Δw2 in weight w2, update w1 and w2, and then use the updated weights when processing the second data point.
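The 10-point example can be sketched for a hypothetical linear model y = w1·x1 + w2·x2 with randomly generated data. Full batch gradient descent makes one update from all 10 points, while one SGD pass makes 10 updates, each using the weights left by the previous one. The mean squared error gradient and the learning rate of 0.1 are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(10, 2))    # 10 hypothetical data points, 2 features each
true_w = np.array([2.0, -1.0])  # hypothetical weights used to generate the targets
y = X @ true_w


def grad(w, Xb, yb):
    """Gradient of 0.5 * mean((Xb @ w - yb)**2) with respect to w."""
    return Xb.T @ (Xb @ w - yb) / len(yb)


lr = 0.1

# Full batch gradient descent: one update of (w1, w2) uses all 10 points.
w_batch = np.zeros(2)
w_batch -= lr * grad(w_batch, X, y)

# Stochastic gradient descent: one pass = 10 updates, one point at a time,
# each update starting from the weights produced by the previous one.
w_sgd = np.zeros(2)
for i in rng.permutation(len(y)):
    w_sgd -= lr * grad(w_sgd, X[i:i + 1], y[i:i + 1])

print("after one full-batch update:", w_batch)
print("after one SGD pass (10 updates):", w_sgd)
```

Both methods compute gradients from the same 10 points in one pass, but SGD turns them into 10 cheaper, noisier updates instead of one exact update.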
