Over the next decade, as advanced driver assistance systems (ADAS) evolve from perception and warning toward decision-making and execution, they will integrate more sensors, perform more complex computation, and have to meet stricter safety requirements.

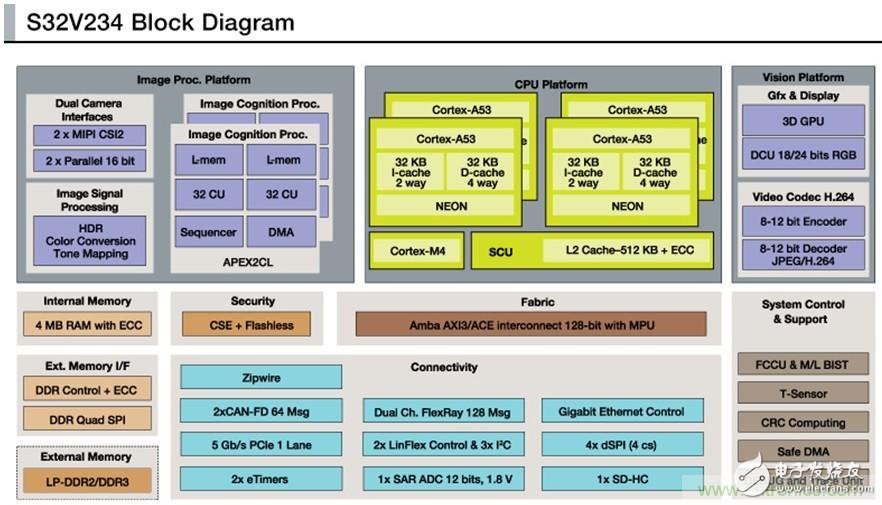

Figure 1: S32V234 structure diagram

The S32V234 uses four ARM Cortex-A53 cores as its main CPU for higher performance per watt. It also includes an ARM Cortex-M4 as an on-chip MCU for real-time control of critical I/O such as CAN-FD, and it supports the AUTOSAR operating system.

The chip integrates two MIPI-CSI2 and two 16-bit parallel camera interfaces, as well as a Gigabit Ethernet controller, providing a variety of options for image sensor input. It also contains a programmable image signal processing (ISP) hardware module. Because the ISP is embedded, externally connected image sensors can output raw data directly, which reduces material cost and saves board space.

In addition, the chip contains two vision acceleration engines called APEX2CL. Each APEX2CL has 64 computing units (CUs) with local memory and accelerates image recognition through SIMD/MIMD (single instruction, multiple data / multiple instruction, multiple data) processing.

Notably, given the stringent safety and reliability requirements of ADAS, the S32V234 incorporates a variety of safety mechanisms, such as ECC (Error Checking and Correction), the FCCU (Fault Collection and Control Unit), and M/L BIST (memory/logic built-in self-test), which allow it to meet ISO 26262 ASIL B to ASIL C requirements.

Introduction to binocular vision

Compared with monocular vision, the key feature of stereo (binocular) vision is that two cameras image the same target from different viewpoints, yielding disparity information from which the target's distance can be estimated. In visual ADAS applications, a monocular camera typically requires large-scale data collection and machine learning training to recognize targets such as pedestrians and vehicles, and it struggles with irregular objects. Lidar offers high ranging accuracy, but its cost and implementation difficulty are also high. The biggest advantage of binocular vision is therefore that it achieves reasonable target recognition and ranging accuracy while keeping development cost low, enabling ADAS functions such as FCW (forward collision warning).

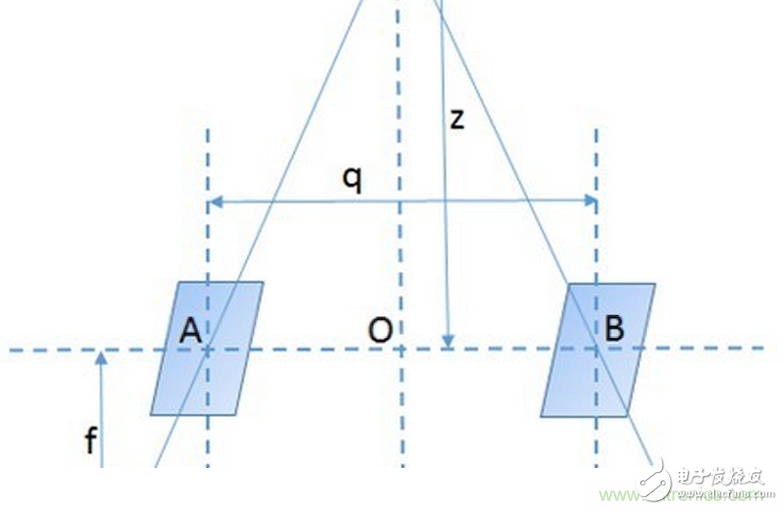

The basic principle of binocular vision ranging is not complicated. As shown in Figure 2, P is the target point. The imaging points on the left and right cameras (the centers of the lenses are A and B respectively) are E and F, respectively. The parallax of the point in the two cameras is d=EC+DF. According to the similarity between the triangle ACE and the POA and the triangle BDF and POB, the derivation can obtain d=(fq)/z, where f is the camera focal length, q is the distance between the two camera optical axes, and z is the distance from the target to the camera plane. Then, the distance z=(fq)/d, and f and q can be regarded as fixed parameters, so that the distance z can be obtained by obtaining the parallax signal d.

Figure 2: Binocular vision ranging principle
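As a quick numerical illustration of $z = \frac{fq}{d}$, the Python sketch below converts a disparity into a distance. It assumes the focal length $f$ is expressed in pixels and the baseline $q$ in metres, so $z$ comes out in metres; the values in the usage lines (f = 1000 px, q = 0.1 m) are hypothetical and are not S32V234 or camera specifications. It also computes $z^2/(fq)$, the approximate distance change caused by a one-pixel disparity error, which follows from differentiating $z = fq/d$ with respect to $d$.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Distance z = f * q / d.

    focal_px:     focal length f in pixels (assumed unit)
    baseline_m:   baseline q in metres (assumed unit)
    disparity_px: disparity d in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (d = 0 means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_step(z_m: float, focal_px: float, baseline_m: float) -> float:
    """Approximate distance change for a one-pixel disparity change: |dz/dd| = z^2 / (f * q)."""
    return z_m * z_m / (focal_px * baseline_m)


f_px, q_m = 1000.0, 0.1  # hypothetical focal length (px) and baseline (m)
print(depth_from_disparity(50, f_px, q_m))
print(depth_step(3.0, f_px, q_m))
```

With these numbers, a 50 px disparity corresponds to 2 m, and at 3 m a one-pixel disparity error shifts the estimate by about 9 cm. The error grows with the square of the distance, which is why accurate disparity extraction matters for ranging.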

Following this ranging principle, the implementation is usually divided into five steps: camera calibration, image acquisition, image preprocessing, feature extraction and stereo matching, and 3D reconstruction. Camera calibration obtains the cameras' intrinsic and extrinsic parameters, distortion coefficients, and so on, and can be performed offline. The other steps are what make binocular ADAS challenging on embedded platforms: the left and right images must be acquired synchronously, preprocessing must deliver high-quality and consistent images, and stereo matching (which yields the disparity) and 3D reconstruction (which yields the distance) are computationally heavy yet must run in real time.
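To give a sense of how heavy stereo matching is, here is a back-of-the-envelope count for a naive block-matching implementation. The parameters (1280×720 input, 64 disparity levels, 9×9 matching window, 30 fps) and the use of the sum of absolute differences (SAD) as the matching cost are assumptions chosen for illustration, not figures from the S32V234 demonstration.

```python
def sad_ops_per_second(width, height, num_disparities, block, fps):
    """Absolute differences per second for naive SAD block matching.

    Every pixel compares a block x block window against num_disparities
    candidate positions, and each comparison costs block * block
    absolute differences.
    """
    per_frame = width * height * num_disparities * block * block
    return per_frame * fps


# Hypothetical parameters: 720p, 64 disparity levels, 9x9 window, 30 fps.
ops = sad_ops_per_second(1280, 720, 64, 9, 30)
print(f"{ops:.3e} absolute differences per second")  # 1.433e+11 absolute differences per second
```

Practical implementations reduce the per-window cost with incremental (running-sum) window updates, but the work still scales with resolution × disparity range × frame rate, which is what motivates dedicated hardware acceleration.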

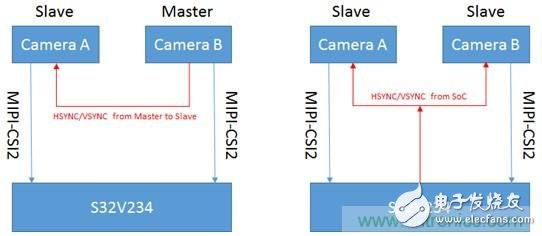

Binocular vision ADAS solution based on S32V234The S32V234 has two MIPI-CSI2 camera interfaces on the chip, each providing a maximum transfer rate of 6 Gbps, which can be used for video input of two left and right cameras. Since the two cameras input two MIPI channels separately, it is necessary to consider the synchronization problem between the two. With the cooperation of external image sensors, the S23V324 can support different synchronization methods. As shown in Figure 3, the image sensor usually has a field sync signal (VSYNC) and a line sync signal (HSYNC) for signal synchronization: when the two cameras operate in the master-slave mode, the master sends a sync signal to the slave; When the camera is operating in slave mode, the S32V234 internal timer can generate a sync signal and send it to both cameras.

Figure 3: Binocular camera synchronization scheme
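Hardware synchronization (master-slave, or VSYNC driven by the S32V234 timer) is what makes the left and right exposures simultaneous. As a complementary software safeguard, frames can also be checked for correct pairing before stereo matching. The sketch below assumes each frame carries a capture timestamp in microseconds; the `Frame` type and the `pair_frames` function are hypothetical and are not part of any NXP SDK. It walks two timestamp-sorted streams and pairs frames whose timestamps differ by no more than a tolerance, dropping frames that have no partner.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    camera: str
    index: int
    timestamp_us: int  # capture time in microseconds (hypothetical field)


def pair_frames(left, right, tolerance_us):
    """Pair left/right frames whose timestamps differ by at most tolerance_us.

    Both lists must be sorted by timestamp. Frames without a partner are dropped.
    """
    pairs = []
    i = j = 0
    while i < len(left) and j < len(right):
        dt = left[i].timestamp_us - right[j].timestamp_us
        if abs(dt) <= tolerance_us:
            pairs.append((left[i], right[j]))
            i += 1
            j += 1
        elif dt < 0:
            i += 1  # left frame is too early to have a partner: skip it
        else:
            j += 1  # right frame is too early to have a partner: skip it
    return pairs


period = 33_333  # approximate 30 fps frame period in microseconds
left = [Frame("L", k, k * period) for k in range(3)]
right = [Frame("R", 0, 40), Frame("R", 1, 2 * period + 30)]  # right camera missed one frame
for lf, rf in pair_frames(left, right, tolerance_us=100):
    print(lf.index, rf.index)
```

The tolerance would in practice be set well below the frame period so that a dropped frame on one side cannot be paired with the wrong frame on the other.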

After the S32V234 acquires the image signals from the external cameras, they can be preprocessed by the internal ISP. The ISP module contains multiple processing units optimized for ISP functions, buffers the input signals and intermediate results in on-chip SRAM, and uses a dedicated coprocessor based on an ARM Cortex-M0+ to schedule the processing units, implementing pixel-level processing of the image signal. Because the ISP is on-chip and flexibly programmable, it not only saves the cost of external ISPs for the binocular cameras, but its computing resources and bandwidth also support real-time processing of two channels of up to 1080p@30fps, ensuring the quality and consistency of both image streams.
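The dual 1080p@30fps figure translates into a concrete input data rate. The sketch below computes it, assuming each raw pixel is stored in 2 bytes (for example, 10- or 12-bit raw data in 16-bit containers); the actual storage format is not given in the text, and the figure covers only the raw input, not the ISP's intermediate buffers.

```python
def raw_input_rate(width, height, fps, streams=2, bytes_per_pixel=2):
    """Return (pixels per second, bytes per second) for raw camera input.

    bytes_per_pixel=2 is an assumption (raw samples in 16-bit containers).
    """
    pixels = width * height * fps * streams
    return pixels, pixels * bytes_per_pixel


pixels, nbytes = raw_input_rate(1920, 1080, 30)
print(f"{pixels:,} pixels/s, {nbytes / 1e6:.1f} MB/s")  # 124,416,000 pixels/s, 248.8 MB/s
```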

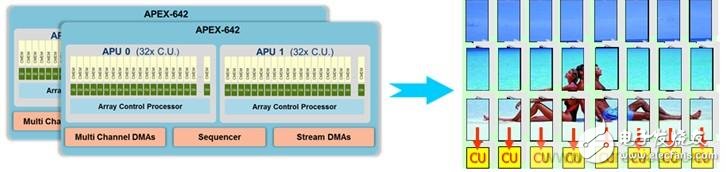

In binocular vision ADAS applications, the biggest challenge comes from the huge amount of computation required for stereo matching and 3D reconstruction of two-way images. Taking the FCW application as an example, the extraction of the disparity signal is required to have sufficient accuracy to ensure the ranging accuracy, and the processing frame rate is required to maintain a certain level to ensure the response speed of the early warning. Therefore, the embedded platform is required to have sufficient processing capability. The structure of the image acceleration engine APEX2 integrated in the S32V234 is shown in Figure 4. The parallel computing structure and dedicated DMA design ensure extremely high processing efficiency for image signals. Specifically, the ISP preprocesses the image signal and sends it to the DDR. The APEX2 engine divides the image and sends it to the local memory CMEM corresponding to each CU via a dedicated DMA, and the block matching required for stereo matching (Block Matching) Algorithms can be processed in parallel in different CUs. After processing, the data is sent back to the DDR via DMA, and further processed by the CPU (such as generating an early warning signal), or sent to a dedicated DCU (Display Control Unit) module output display.

Figure 4: APEX2 architecture and image processing diagram
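The divide-and-match scheme can be sketched in plain Python. The text does not name the matching cost, so this sketch assumes SAD; the image is split into horizontal bands of rows, each matched independently to stand in for the per-CU work. It is sequential, uses no NXP API, and only illustrates why block matching partitions well; all function names are hypothetical.

```python
import random


def make_texture(h, w, seed=0):
    """Random test image (values 0..255) with enough texture to match."""
    rng = random.Random(seed)
    return [[rng.randrange(256) for _ in range(w)] for _ in range(h)]


def sad(left, right, y, xl, xr, half):
    """Sum of absolute differences between two (2*half+1)^2 windows centred on row y."""
    total = 0
    for dy in range(-half, half + 1):
        row_l, row_r = left[y + dy], right[y + dy]
        for dx in range(-half, half + 1):
            total += abs(row_l[xl + dx] - row_r[xr + dx])
    return total


def match_rows(left, right, rows, block, max_disp):
    """Disparities for one band of rows, the unit of work given to one 'CU'.

    The band also reads `half` rows above and below itself; a tiled
    implementation would have to transfer these overlapping halo rows too.
    """
    h, w = len(left), len(left[0])
    half = block // 2
    out = {}
    for y in rows:
        line = [0] * w  # pixels without a full window or search range stay 0
        if half <= y < h - half:
            for x in range(half + max_disp, w - half):
                best_d, best_cost = 0, None
                for d in range(max_disp + 1):
                    cost = sad(left, right, y, x, x - d, half)
                    if best_cost is None or cost < best_cost:
                        best_d, best_cost = d, cost
                line[x] = best_d
        out[y] = line
    return out


def block_match_tiled(left, right, block=5, max_disp=4, n_tiles=4):
    """Split the rows into n_tiles bands, match each band independently, then merge."""
    h = len(left)
    band = (h + n_tiles - 1) // n_tiles
    disparity = [None] * h
    for t in range(n_tiles):  # bands are independent, so they could run concurrently
        rows = range(t * band, min((t + 1) * band, h))
        for y, line in match_rows(left, right, rows, block, max_disp).items():
            disparity[y] = line
    return disparity


# Synthetic pair: the right view is the left view shifted by 2 pixels,
# so the true disparity is 2 wherever it can be measured.
left = make_texture(16, 24)
right = [[row[x + 2] if x + 2 < len(row) else 0 for x in range(len(row))] for row in left]
disp = block_match_tiled(left, right)
print(disp[8][6:22])
```

Because each band's output depends only on its own rows plus a small halo, bands can be distributed across many compute units with little coordination, which is the property the APEX2 partitioning exploits.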

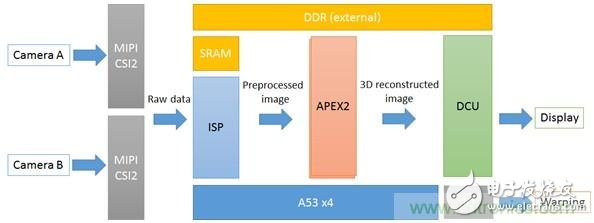

In summary, the data flow of the binocular vision application based on S32V234 is shown in FIG. 5. In this application, the data flow flows in the direction of ISP-APEX2-DCU, and A53 acts as the master CPU to complete the logic control and necessary data processing. Through this pipelined processing method, the computing resources of each part can be fully utilized and the calculation efficiency can be improved.

Figure 5: Binocular vision data stream based on S32V234
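The pipelined ISP → APEX2 → DCU flow can be modelled in software with one worker per stage, connected by queues, while a controller (playing the A53 role) feeds frames in. The stage functions below are hypothetical string-tagging stand-ins for the hardware units. CPython threads do not speed up CPU-bound work, so this shows the structure of the pipeline (each stage can work on frame n+1 while the next stage handles frame n), not its performance.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the frame stream


def _run_stage(work, inbox, outbox):
    """Apply `work` to each item from inbox and forward the result downstream."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)
            return
        outbox.put(work(item))


def run_pipeline(frames, stages):
    """Push frames through the stages, each stage running in its own thread."""
    queues = [queue.Queue() for _ in range(len(stages) + 1)]
    workers = [
        threading.Thread(target=_run_stage, args=(work, queues[k], queues[k + 1]))
        for k, work in enumerate(stages)
    ]
    for w in workers:
        w.start()
    for frame in frames:  # the controller feeds frames into the first stage
        queues[0].put(frame)
    queues[0].put(_DONE)
    results = []
    while (item := queues[-1].get()) is not _DONE:
        results.append(item)
    for w in workers:
        w.join()
    return results


# Hypothetical stand-ins for the hardware stages.
def isp(frame):
    return f"isp({frame})"


def apex2(frame):
    return f"apex2({frame})"


def dcu(frame):
    return f"dcu({frame})"


print(run_pipeline(range(3), [isp, apex2, dcu]))
```

Each stage processes items in FIFO order, so frames leave the pipeline in the order they entered.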

A binocular vision platform built on the S32V234 development board was used to process two channels of 720p@30fps video. The output is shown in Figure 6: in the three images, from left to right, the target is 1 m, 2 m, and 3 m from the cameras, and the display encodes target distance on a warm-to-cool color scale. The results show that the S32V234 can process binocular vision signals in real time and correctly obtain 3D ranging results, while the chip's safety design meets the requirements of a binocular vision ADAS system.

Figure 6: S32V234 binocular vision display results
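A distance-to-color mapping of the kind used in the display can be sketched as a linear blend. The text does not say which end of the scale marks near targets, so this sketch assumes warm (red) for near and cool (blue) for far, over a hypothetical 1 to 3 m range matching the three test distances. `depth_to_rgb` is illustrative only and is not the display code used in the demonstration.

```python
def depth_to_rgb(z_m, z_near=1.0, z_far=3.0):
    """Map a distance in metres to (R, G, B): red when near, blue when far (assumed)."""
    t = (z_m - z_near) / (z_far - z_near)
    t = min(max(t, 0.0), 1.0)  # clamp distances outside [z_near, z_far]
    return (round(255 * (1 - t)), 0, round(255 * t))


for z in (1.0, 2.0, 3.0):
    print(z, depth_to_rgb(z))
```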

To sum up

NXP's dedicated vision ADAS chip, the S32V234, integrates dedicated computing units such as the image signal processor (ISP), the APEX2 image acceleration engine, and a 3D GPU, and makes full use of these heterogeneous computing resources through a pipelined processing architecture. Support for multiple APIs, such as OpenCV, OpenCL, and OpenVG, across its different computing modules improves algorithm portability, and its ISO 26262-compliant functional safety design lets the chip meet the stringent safety requirements of ADAS. The S32V234 supports a wide range of visual ADAS and sensor data fusion solutions, including binocular vision, marking a solid step on the road to autonomous driving.
