Google made a strong impression at this year's annual I/O conference. It not only published a series of benchmarks for its Cloud TPU instances, which are based on the TPUv2 chip, but also revealed a few details about its next-generation TPU chip, TPU 3.0, and its system architecture. Paul Teich, a technology expert and chief analyst at TIRIAS Research, recently published an article on The Next Platform that takes an in-depth look at Google's TPU 3.0.

Google moved the version number from TPUv2 to TPU 3.0 but, ironically, the details we have seen so far suggest that the step from TPUv2 to TPU 3.0 (hereinafter TPUv3) is not that large; calling it TPUv2r5 or something similar might be more appropriate.

If you are not familiar with TPUv2, our evaluation of TPUv2 from last year provides the background. We use Google's definition of a Cloud TPU, which is a board containing four TPU chips. Google's current Cloud TPU beta program only allows a user to access a single Cloud TPU. Apart from Google's internal developers, no one can combine multiple Cloud TPUs in any way. We learned last year that Google has abstracted Cloud TPUs beneath its TensorFlow deep learning (DL) framework. Apart from Google's internal TensorFlow development team and Google Cloud, no one has direct access to Cloud TPU hardware, and perhaps no one ever will.

We also believe that Google has funded a huge software engineering and optimization effort to get its current Cloud TPU beta deployed. That gives Google a strong incentive to retain as many of TPUv2's system interfaces and behaviors as possible in TPUv3, namely the hardware abstraction layer and the application programming interfaces (APIs). Google gave no information about when TPUv3 services will become available, whether as Cloud TPUs or in multi-rack pod configurations. It did show photos of the TPUv3-based Cloud TPU board and of some pods, and made the following statements:

The TPUv3 chip runs so hot that Google has introduced liquid cooling in its data centers for the first time. Each TPUv3 pod will be eight times as powerful as a TPUv2 pod.

However, Google also reiterated that a TPUv2 pod peaks at 11.5 petaflops. An 8x improvement would put the TPUv3 pod at a baseline of 92 petaflops, yet the 100 petaflops figure Google quoted for it is almost 9 times the TPUv2 number. Google's marketers presumably rounded these figures, so they may not be exact.
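The arithmetic behind these two claims can be checked with a minimal sketch. The figures are the ones quoted above (11.5 petaflops, 8x, 100 petaflops); the function and variable names are illustrative only. Note that 8 times 11.5 works out to 92.0.

```python
# Figures quoted in the article; names are illustrative, not Google's.
TPUV2_POD_PFLOPS = 11.5       # TPUv2 pod peak, as restated by Google
CLAIMED_SPEEDUP = 8           # "eight times" claim for the TPUv3 pod
QUOTED_TPUV3_PFLOPS = 100.0   # round figure quoted for the TPUv3 pod


def scaled_peak(base_pflops: float, factor: float) -> float:
    """Peak performance after scaling a baseline by a constant factor."""
    return base_pflops * factor


def implied_speedup(new_pflops: float, base_pflops: float) -> float:
    """Speedup implied by two peak-performance figures."""
    return new_pflops / base_pflops


baseline = scaled_peak(TPUV2_POD_PFLOPS, CLAIMED_SPEEDUP)
ratio = implied_speedup(QUOTED_TPUV3_PFLOPS, TPUV2_POD_PFLOPS)

print(f"8x of the TPUv2 pod: {baseline:.1f} petaflops")
print(f"100 petaflops is {ratio:.2f}x the TPUv2 pod")
```

The two claims are therefore not quite the same number: one implies roughly 92 petaflops, the other a speedup of roughly 8.7x.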

Pod

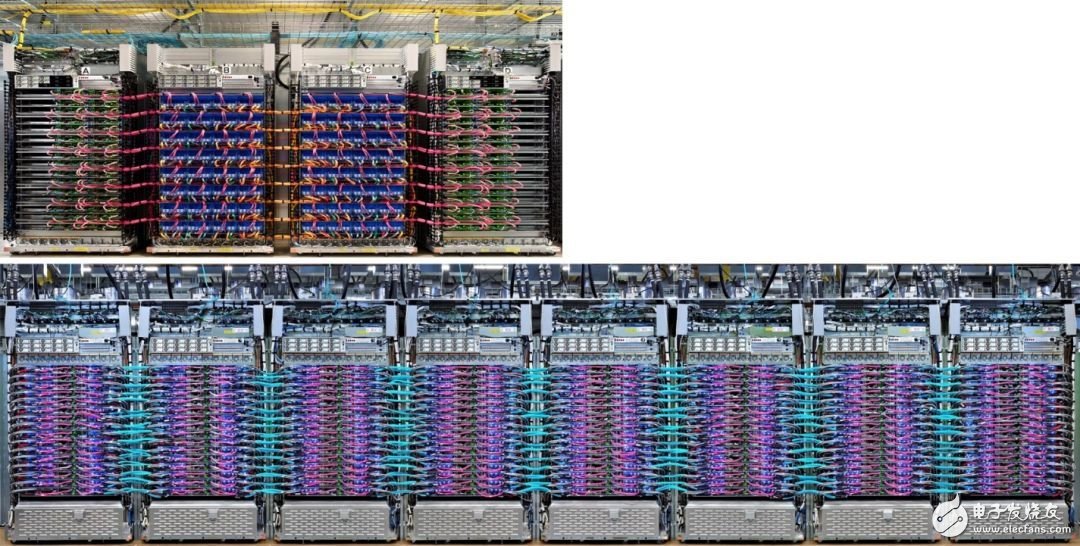

The two full photos of the TPUv3 pod make it obvious what Google has scaled up in its next-generation product:

The number of racks per pod has doubled. The number of Cloud TPUs per rack has doubled. With no other changes, these two factors alone would give a TPUv3 pod 4 times the performance of a TPUv2 pod.

Pod: TPUv2 (top) and TPUv3 (bottom)

Rack
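A short sketch of how the packaging changes combine. The two doubling factors and the 8x pod claim come from the text; the conclusion that the remaining factor must come from each Cloud TPU is our inference, not something Google stated, and the variable names are illustrative.

```python
# Scaling factors described in the article (TPUv3 pod relative to TPUv2 pod).
RACKS_PER_POD_FACTOR = 2         # twice as many racks per pod
CLOUD_TPUS_PER_RACK_FACTOR = 2   # twice as many Cloud TPUs per rack
CLAIMED_POD_FACTOR = 8           # Google's "eight times" pod-level claim

# Gain from packaging alone: more racks, each holding more Cloud TPUs.
packaging_factor = RACKS_PER_POD_FACTOR * CLOUD_TPUS_PER_RACK_FACTOR

# Inference (an assumption, not from Google): whatever is left over must
# come from improvements inside each Cloud TPU.
residual_per_cloud_tpu = CLAIMED_POD_FACTOR / packaging_factor

print(f"Packaging alone: {packaging_factor}x")
print(f"Implied gain per Cloud TPU: {residual_per_cloud_tpu:.1f}x")
```

Under these assumptions, the packaging changes account for half of the claimed gain in multiplicative terms, leaving about 2x for each Cloud TPU.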

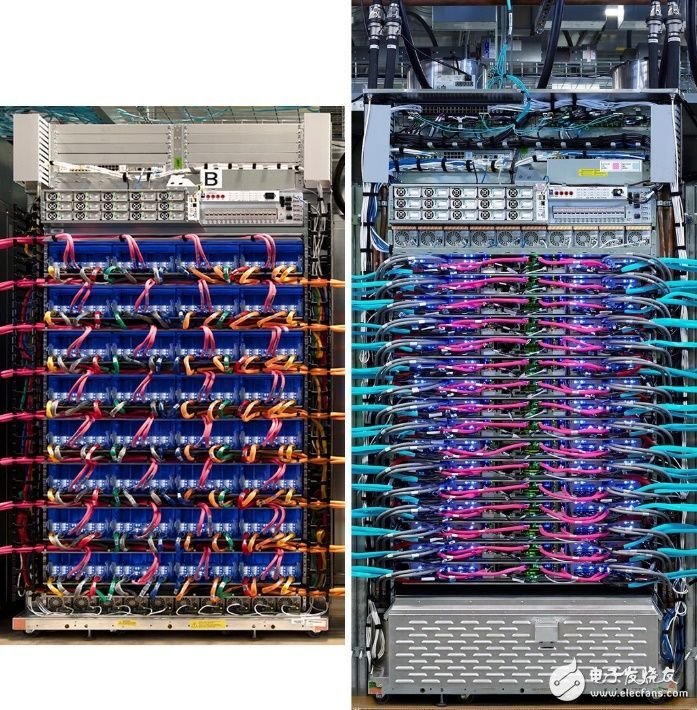

The racks in a TPUv3 pod are spaced more closely than the racks in a TPUv2 pod. However, as with the TPUv2 pod, there are still no obvious storage components in the TPUv3 pod. The TPUv3 racks are also taller, to accommodate the added water-cooling equipment.

Rack: TPUv2 (left) and TPUv3 (right)

Google moved the uninterruptible power supply from the bottom of the TPUv2 rack to the top of the TPUv3 rack. We assume that the large metal box at the bottom of the rack now contains a water pump or other water-cooling equipment.

Modern hyperscale data centers do not use raised floors. Google's racks are heavy even before water is added, so they sit directly on the concrete slab, and water enters and leaves through the top of the rack. Google's data centers have plenty of overhead space, as the photo of the TPUv3 pod shows. However, hanging and routing heavy water pipes must be an additional operational challenge.

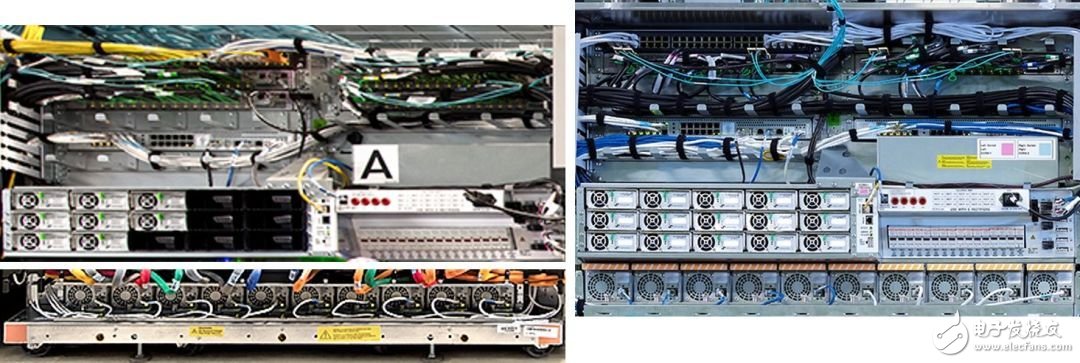

TPUv3 water connections (top left), water pump (bottom left, our guess) and data center infrastructure above the racks (right)

Note the stranded wire on the floor in front of the racks, just in front of the large metal box at the bottom of each rack; it may be a moisture sensor.

Shelf and motherboard

Google not only doubled the compute density of its racks, but also reduced the ratio of server boards to Cloud TPUs from one-to-one to one-to-two. This will affect power consumption estimates, because the servers and the Cloud TPUs in a TPUv3 pod draw power from the same rack power supply.

Google bills the server board used by its current Cloud TPU beta instances as a Compute Engine n1-standard-2 instance on its Google Cloud Platform public cloud, which has two virtual CPUs and 7.5 GB of memory. We think this is likely to be a mainstream dual-socket x86 server.

Recall that a TPUv2 pod contains 256 TPUv2 chips and 128 server processors. A TPUv3 pod will double the number of server processors and quadruple the number of TPU chips.

We believe that Google over-provisioned the servers in its TPUv2 pod. That is understandable for a new chip and system architecture. After at least a year of tuning the pod software, plus a minor revision to the chip, halving the number of servers per Cloud TPU may have a negligible impact on pod performance. There could be many reasons for this. Perhaps the servers were never limited by compute or bandwidth, or Google may have deployed a newer generation of Intel Xeon or AMD Epyc processors with more cores.
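The pod counts can be worked through in a small sketch. It starts from the TPUv2 figures above (256 chips, 128 server processors, four chips per Cloud TPU) and applies the scaling described in the pod section: twice the racks and twice the Cloud TPUs per rack, which together quadruple the chip count, while server processors double with the racks. The `Pod` class and its field names are hypothetical, for illustration only.

```python
from dataclasses import dataclass

CHIPS_PER_CLOUD_TPU = 4  # a Cloud TPU is a board with four TPU chips


@dataclass(frozen=True)
class Pod:
    """Hypothetical record of the per-pod counts discussed in the article."""
    name: str
    tpu_chips: int
    server_processors: int

    @property
    def cloud_tpus(self) -> int:
        return self.tpu_chips // CHIPS_PER_CLOUD_TPU

    @property
    def chips_per_server_processor(self) -> float:
        return self.tpu_chips / self.server_processors


tpuv2 = Pod("TPUv2", tpu_chips=256, server_processors=128)

# 2x racks and 2x Cloud TPUs per rack -> 4x chips; servers scale only
# with the number of racks -> 2x server processors.
tpuv3 = Pod(
    "TPUv3",
    tpu_chips=tpuv2.tpu_chips * 2 * 2,
    server_processors=tpuv2.server_processors * 2,
)

for pod in (tpuv2, tpuv3):
    print(
        f"{pod.name}: {pod.tpu_chips} chips, {pod.cloud_tpus} Cloud TPUs, "
        f"{pod.server_processors} server processors, "
        f"{pod.chips_per_server_processor:.0f} chips per server processor"
    )
```

On these numbers each server processor goes from feeding two TPU chips to feeding four, which is the halving of servers per Cloud TPU that the article describes.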
